- PagerDuty /

- Blog /

- Automation /

- Unlocking the Value of your Runbook Automation Value Metrics with Snowflake, Jupyter Notebooks, and Python

Blog

Unlocking the Value of your Runbook Automation Value Metrics with Snowflake, Jupyter Notebooks, and Python

This blog was co-authored by Sebastian Joseph, Senior Solutions Consultant, PagerDuty, and Gabriel Ismael Felipe, Automation Engineer

Automation has become an integral piece in business practices of the modern organization. Oftentimes when folks hear “automation,” they think of it as a means to remove the manual aspect of the work and speed up the process; however, what lacks the spotlight is the value and return automation can offer to an organization, a team, or even just one specific process.

Understanding the value derived from automated jobs and return on investment (ROI) from these automated processes is a critical metric for value realization, be it in terms of time saved, errors avoided, risk mitigation, number of executions, etc.

With the PagerDuty Operations Cloud, teams are empowered to build, centralize and execute automation at will, without having to context switch or make any drastic toolset changes, largely due to our vast 700+ integration ecosystem. In this blog, we are going to zoom in on two key integrations, Snowflake and Jupyter Notebooks. So how can you collect automation-related ROI information in Pagerduty Process Automation, and create reports that capture this value? Let’s get into it.

The Automation Spiral Paradox

Technology teams building and innovating on a daily basis often get stuck in the “death spiral” of automating operations and processes. The conversation will typically unfold like this:

- “We are too busy to automate.”

- “Why are we too busy to automate?”

- “We have all these tasks!”

- “Why don’t we automate those tasks?”

- “We’re too busy to take the time to automate those tasks!”

By showing the ongoing value of automation to the extended team, we can break this cycle and show that we can’t afford not to automate this toil.

In this blog, we’ll dive into two different ways to do this:

- An API integration of Pagerduty Automation and Snowflake–how to use an API based integration of Pagerduty Automation and Snowflake to capture the ROI data for each job run, and building a dashboard with this data in Snowflake to showcase the ROI value from automation

- Build further integrations or custom reports using Jupyter Notebooks

Unlocking the Value of your Runbook Automation Value Metrics (ROI) with Snowflake

Why Snowflake?

A large number of organizations use Snowflake as their data lake, and the dashboard tool in Snowflake can capture & represent the value of automation. By utilizing Snowflake and the PagerDuty Operations Cloud together, organizations can improve their data operational efficiency, minimize data downtime, and establish real-time communication with stakeholders for effective data management.

SNOWFLAKE Setup:

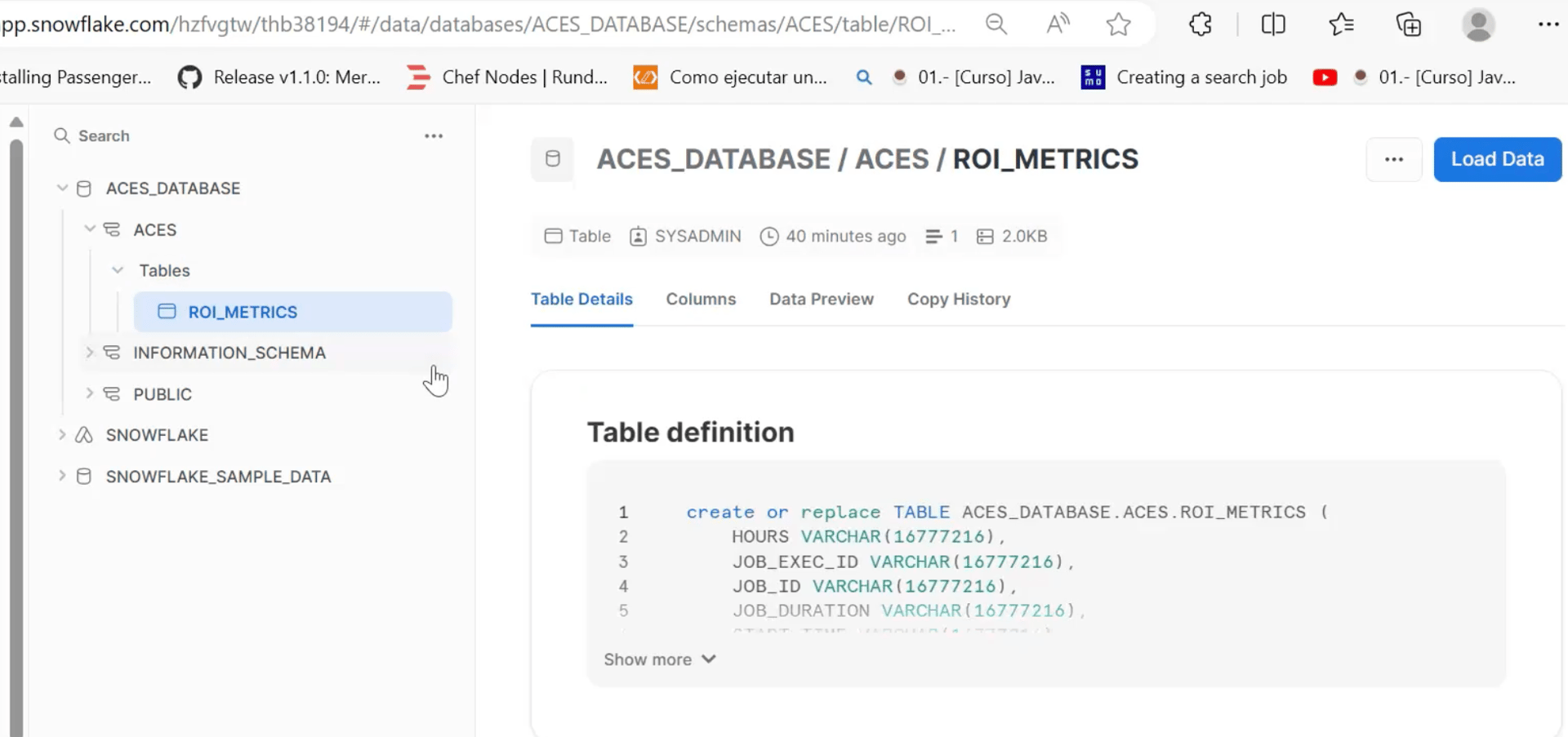

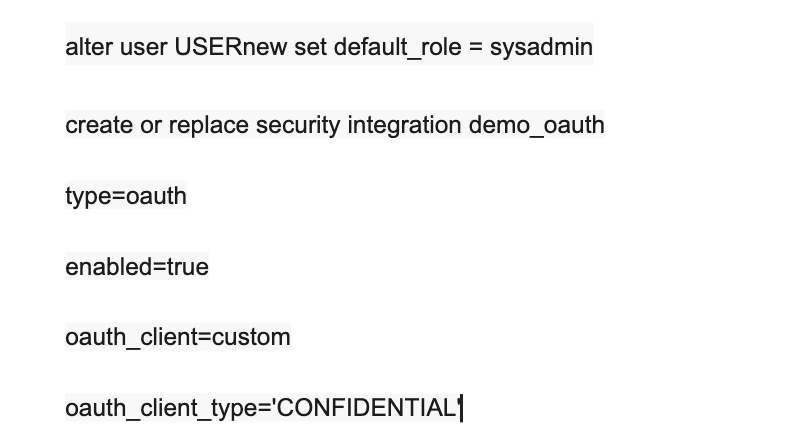

Let’s first define the Security integration in Snowflake:

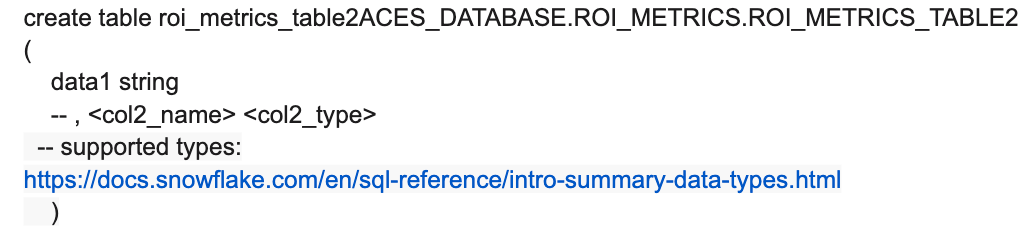

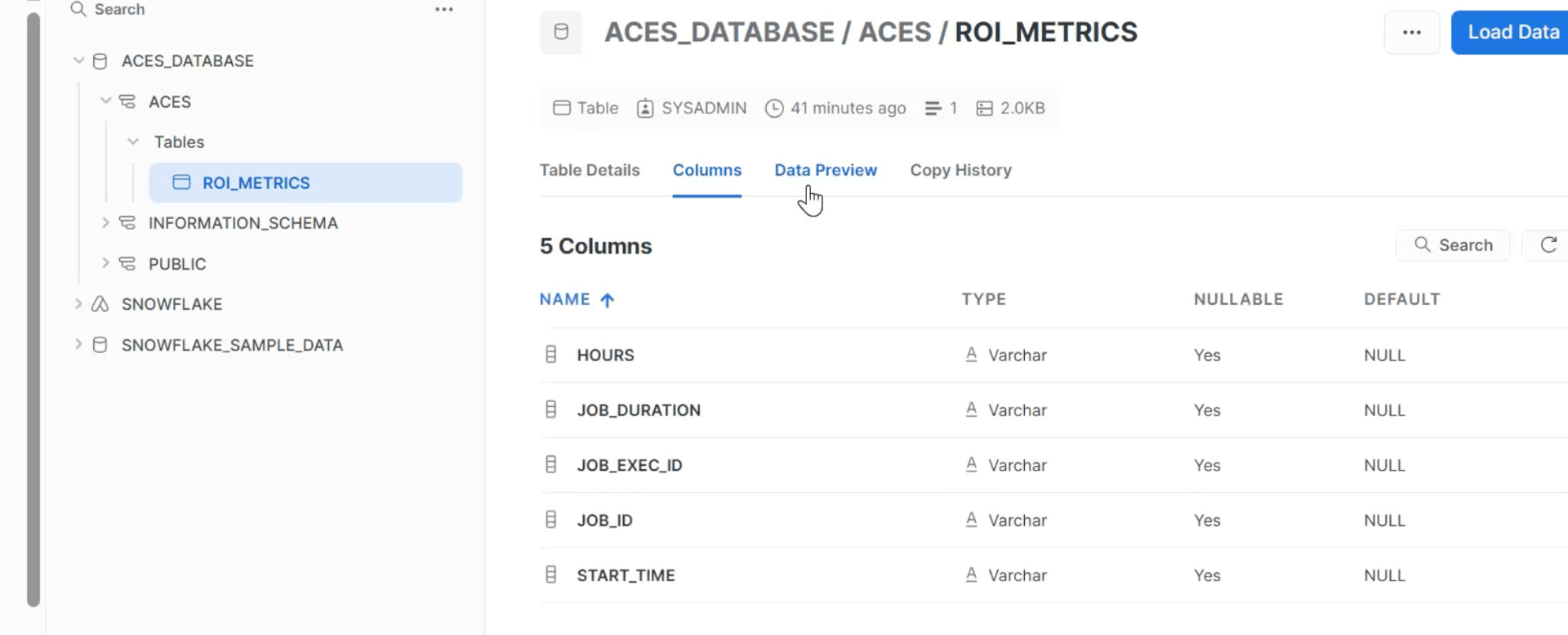

Then we can DEFINE the WORKSHEET Table & FIELDS in Snowflake. Some sample snapshots and steps are down here:

PagerDuty Process Automation setup steps:

Tag a job for measurement and the values in time or revenue attached in your automation job.

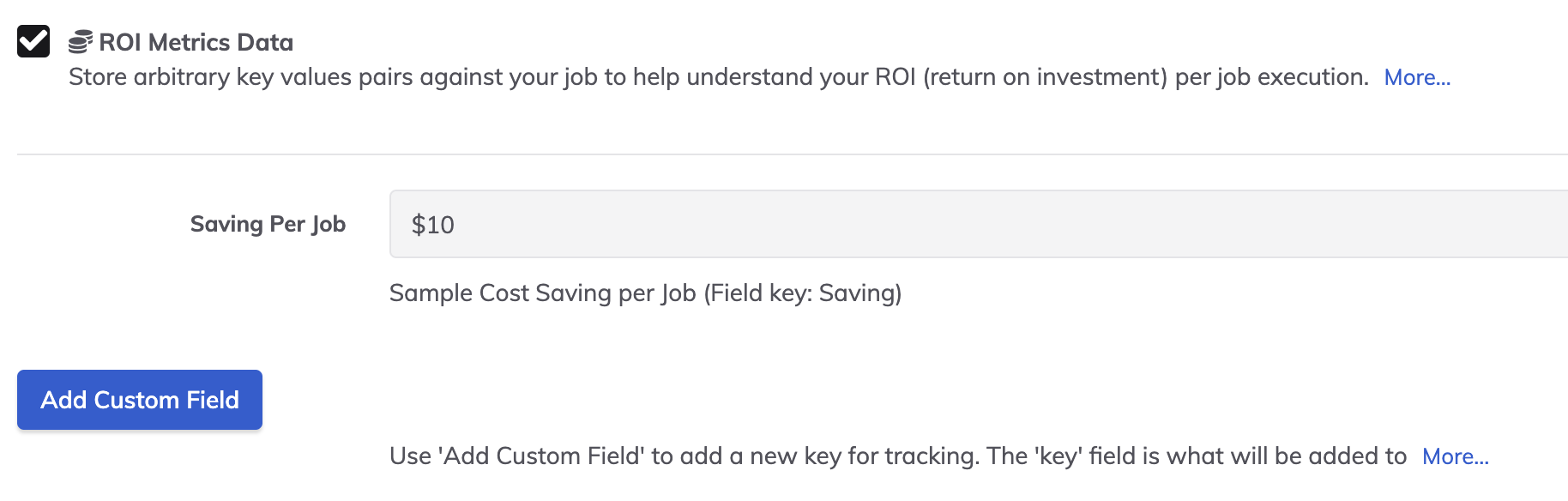

Each individual job may have its own metric or metrics. For this example, let’s assume the job saves 10 minutes of toil for a mid-level DevOps team member, equating to $10 per execution.

Further information detailed in the PagerDuty Runbook Automation documentation.

Detailed steps:

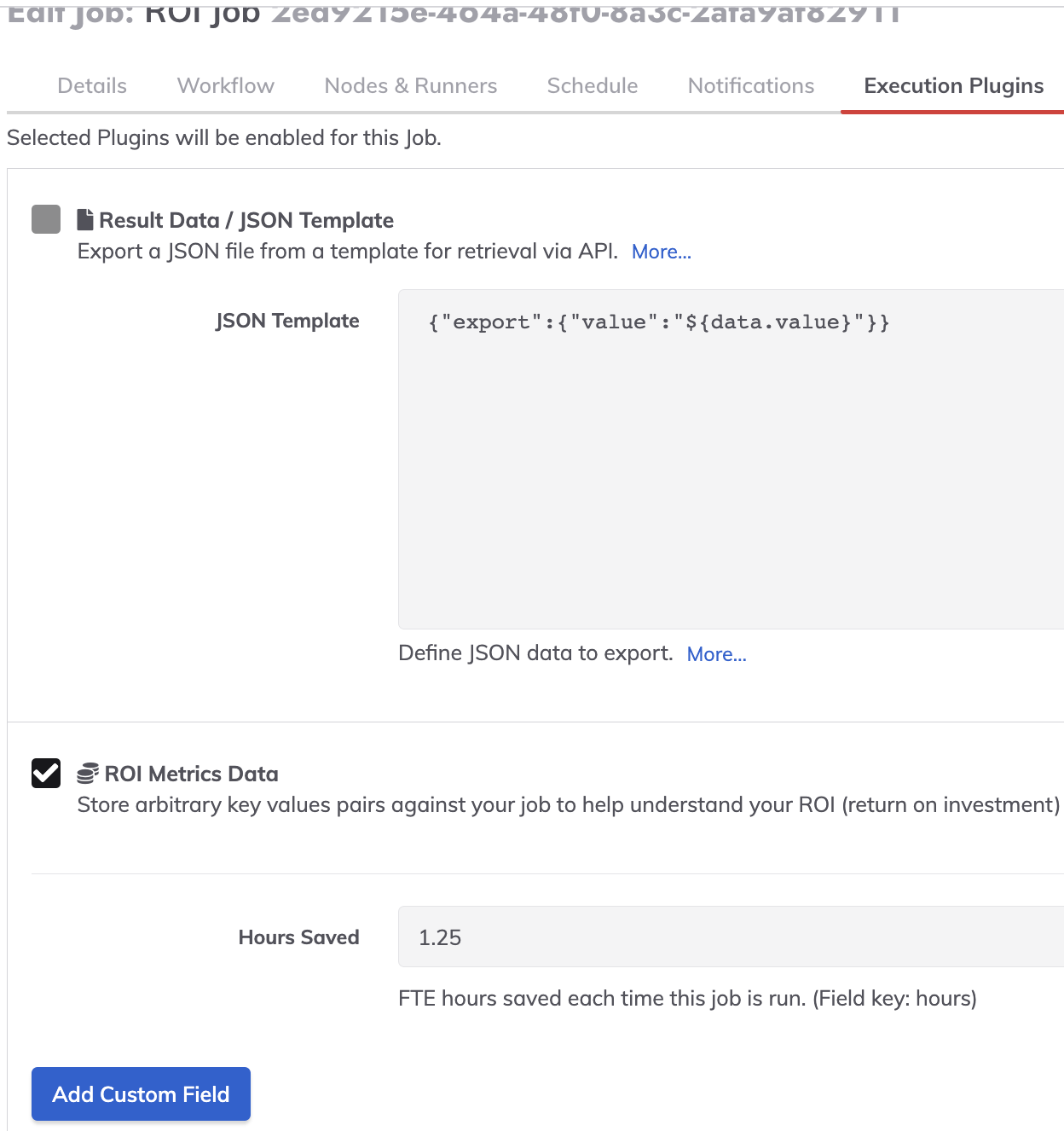

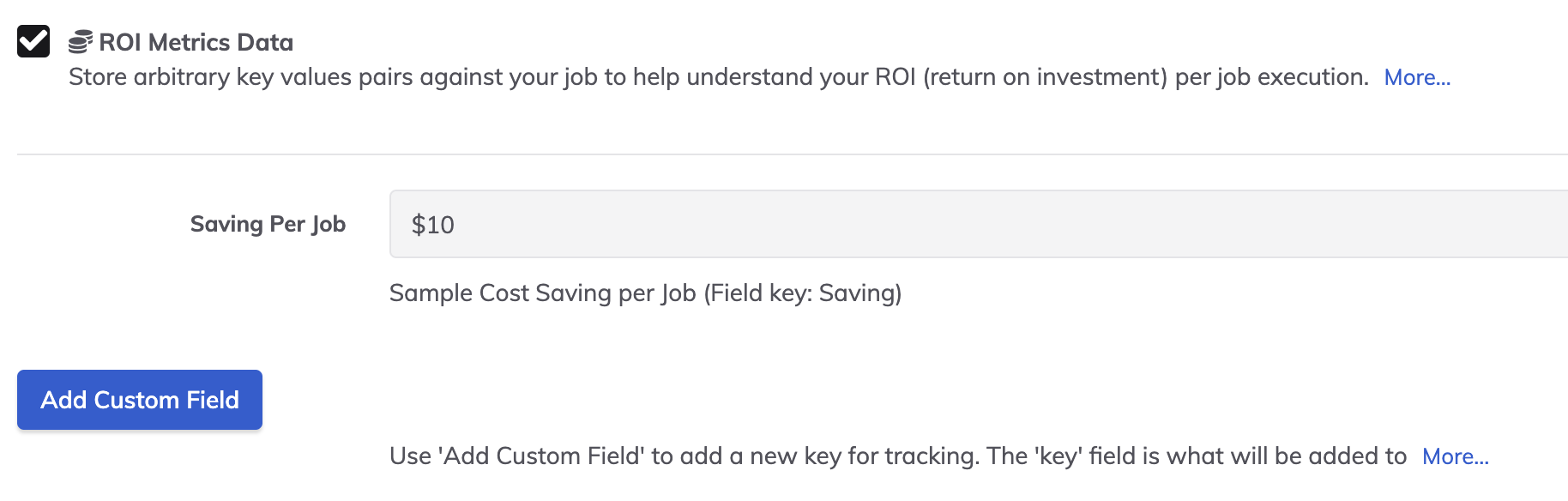

We use the “ROI Metrics” plugin available in Job definition to capture the “Hours Saved” data when an automation job is run. This has to be manually set/defined for the job based on time saved using automation.

In the Edit More of the Job in Process Automation, check the “ROI Metrics Data” flag. (Edit job -> Execution Plugins -> Check ROI Metrics Data (with a valid ‘Hours Saved’ info filled in, example: 1.25 hours).

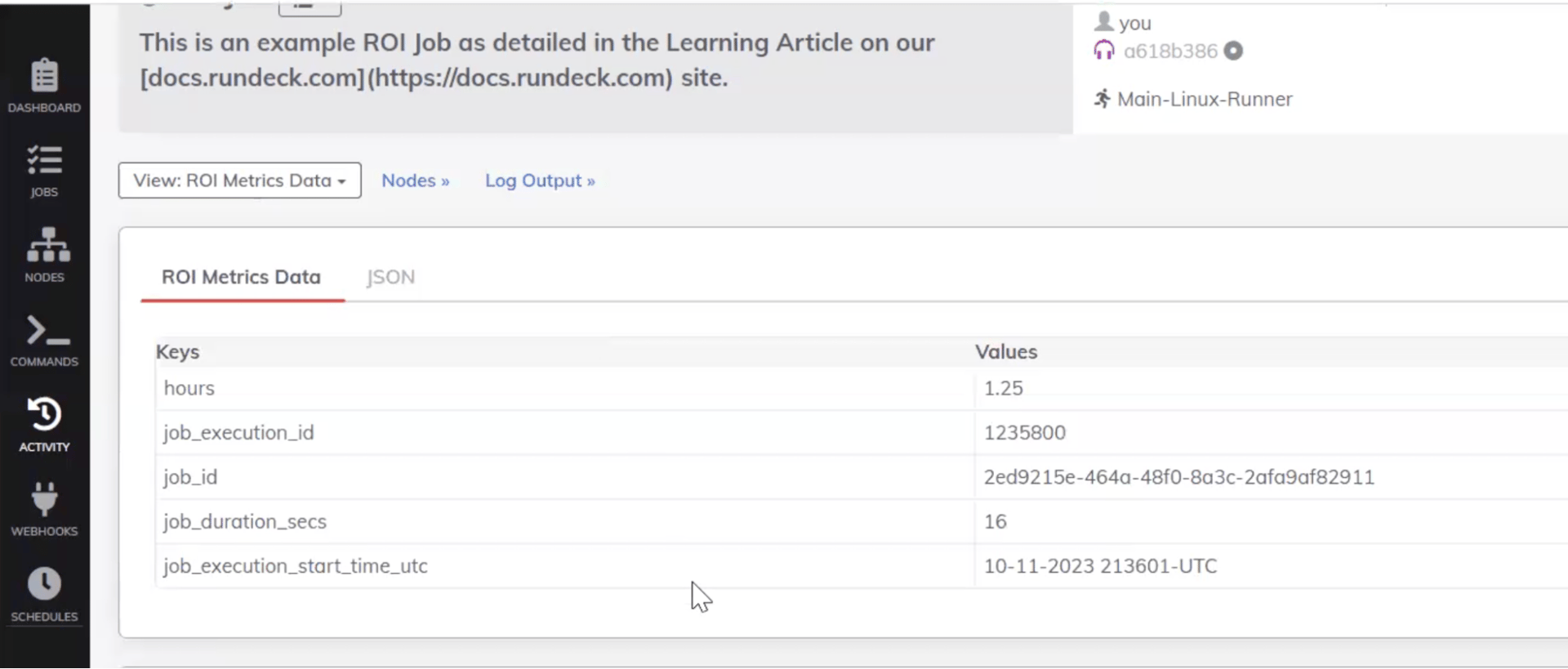

The above will provide the following ROI Metric Data output when the job executes:

Then, post the key-value ROI data collected in PA job step to a database defined in Snowflake using an HTTP Method: Post, and the embedded SQL statement.

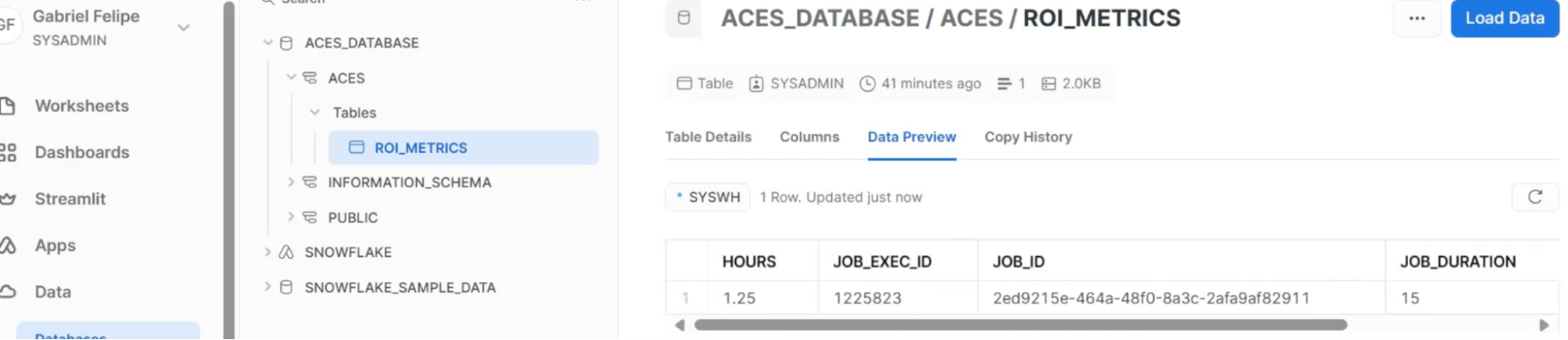

Output when posted in Snowflake (for each execution of the job a row will be added with the metric):

Runbook Automation Value Metrics (ROI) using Jupyter Notebook:

Why a Jupyter Notebook?

Jupyter Notebooks offer an interactive computing environment that enables users to combine code, markdown text and visual outputs. This makes it ideal for data analysis, sharing insights, and even distributing software tools. Let’s dive into a Python script designed to extract ROI metrics via the Runbook Automation API. We’ll also discuss how to distribute this tool as a Jupyter Notebook along with a .env file for easy configuration.

The sample code can be modified or extended to suit your own environments, notebooks, business intelligence (BI) tools or scheduled scripts. The approach can also be modified to your preferred language.

Before you start:

Jobs will need tagging to collect its own ROI Metrics (described here).

Each individual job may have its own metric or metrics. For this example let’s assume the job saves 10 minutes of toil for a mid-level DevOps team member, equating to $10 per execution.

Further information detailed in the Runbook Automation documentation

Core Concepts

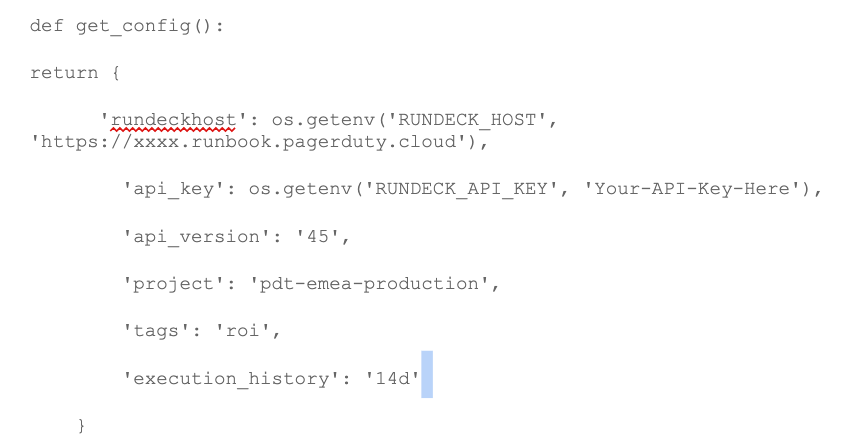

Configuration and Environment Variables

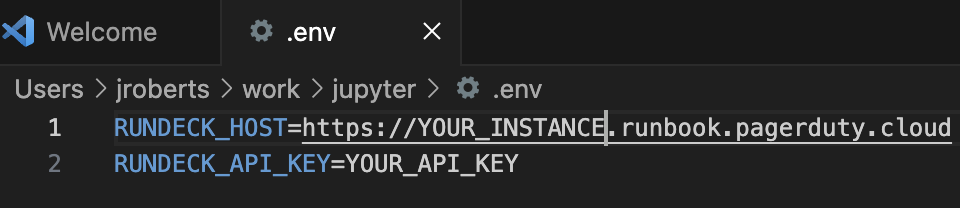

The .env file serves as the basis of our script’s configuration. By distributing this file along with the Jupyter Notebook, users can safely set up their environment, and externalize sensitive features like the API key required.

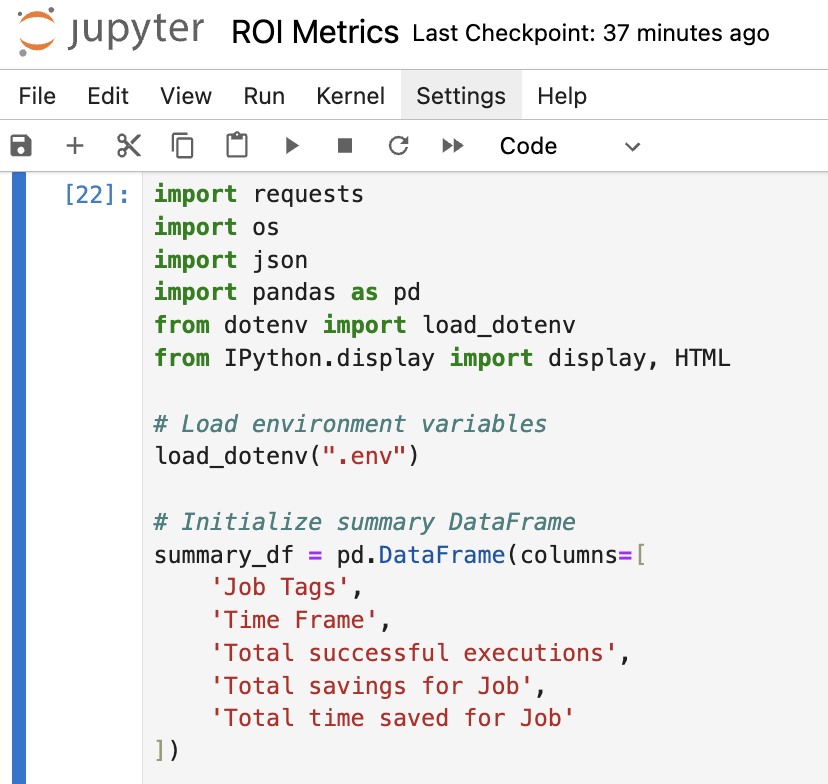

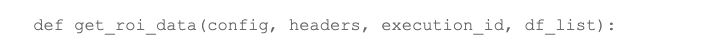

Allowing reference the configuration data in the notebook:

You can easily modify the notebook for the project, tags and history (eg. 1h, 24h,1d,14d).

Authenticating and Fetching Job Data

Authentication is via a bearer token, generated in Runbook Automation, and stored in the environment file for safety.

Once authenticated the flow within the code looks like this:

- Utilizing the Runbook Automation API, the script fetches a list of jobs based on specific tags. This return a list of Job IDs per tag

![]()

- For each ID, we look at the execution list for the Job, returning a list of execution IDs

![]()

- Finally we iterate through the executions, to return the ROI data

Data Aggregation and Analysis

All the data we collect is stored in a Pandas DataFrame, which makes subsequent analysis and reporting straightforward. Due to the data iteration, some larger datasets may take a while to process.

Summarizing the Metrics

Some modifications are required to treat output values as time or Int values, but the supplied code should be self-explanatory.

For the example we also added the total running time of each successful job, in case any sort of comparison was required “against a manual execution of a job or jobs”

Potential Improvements and Future Modifications

- Error Handling: As of now, the notebook lacks in-depth error handling, which would make it more robust.

- Pagination: Currently, the script retrieves up to 1,000 execution records. Implementing pagination would allow it to handle larger datasets.

- Data Visualization: Incorporating graphical representations of the data would provide more actionable insights.

- Modify to run multiple jobs, and store in external BI platform.

Conclusion and Next Steps

1. This Jupyter Notebook, serves as a powerful tool for gathering ROI metrics and evaluating your automated processes. It works as-is, and with a few enhancements, this notebook can serve as an indispensable asset in your automation toolbox.

2. More enhanced ROI metrics can be collected and a dashboard depiction of the outcomes can be built using further integration of Jupyter notebooks & Snowflake. Jupyter Notebook is used for interactive computing using Python.

3. Snowflake can be used to capture these ROI snapshots and roll up values across multiple environments, as well as the display of ROI data across longer timeframes. The required steps to perform query using Python via Jupyter Notebook and work with Snowflake:

- Connect Jupyter Notebook to Snowflake ( you can do this using SnowCD and Python Connector, as illustrated in the following example: (https://blogs.perficient.com/2020/06/06/integrating-jupyter-notebook-with-snowflake/)

- Once this is done, you will be able to create tables, load data and perform queries using Python via Jupyter Notebooks.

4. Now you have access to this data, it can be used as a proof point to senior management on the value of automation, a daily digest to your automation users, or form part of the value you return to customers in a managed service environment.

Our Services teams are also able to help with any specific data tasks and customisation.

What’s next?

PagerDuty Automation will continue to augment our ROI capture capabilities in our automation platform. The objective is to showcase the various ways in which automation caters to efficiency, risk mitigation, cost savings and other aspects towards which automation delivers value. We will keep this blog updated as these capabilities roll out.

One powerful way to showcase the impact of automation, and bring automation to the forefront of digital transformation across the organization is by broadcasting the ROI data and dashboards to management so they can understand and realize the value automation delivers. Data speaks for itself, and PagerDuty is making it easier to capture relevant data in the automation platform every time automation runs.

Have fun!