Cassandra Load Disparities with WAN-based Quorum Reads

Here at PagerDuty, we face some interesting architectural challenges in order to guarantee alert delivery and provide our customers with the highest level of reliability possible. We have in the past declared that if you receive a 200 OK from the PagerDuty event endpoint, you will be paged… even if the datacenter you communicated with blinks out of existence immediately following your request. In order to keep that guarantee, we must synchronously write the event to multiple datacenters. We use Apache Cassandra to accomplish this and, as a result, rely heavily on majority quorum reads and writes.

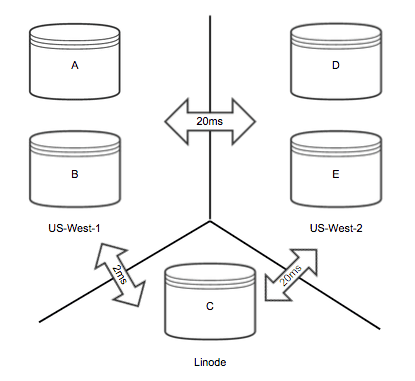

With such guarantees in place, we have some unusual requirements of our Cassandra deployments. A typical Cassandra deployment at PagerDuty consists of five nodes spread across three datacenters, with a replication factor of five. This configuration works nicely for highly available geo-distributed quorum reads and writes, and we have written about it before, though we are not here to talk about that today. Instead, we will discuss something slightly more interesting: deterministic read hotspots, despite perfectly even data distribution and uniform access patterns!

Those boxes just run hot…

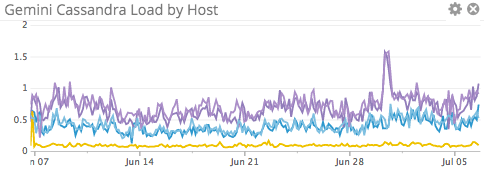

We have for some time noticed that Cassandra hosts in one of our datacenters (AWS us-west-1) run hotter than the rest. We had also come to realize that the hardware in that datacenter was slightly older and less efficient than that of the other two. Seemed pretty straight-forward: weaker hardware in that DC was resulting in elevated load when compared to the somewhat better hardware in the rest of the ring. One of the hosts in the ring has more cores than the others (located in Linode), and is subsequently the least loaded, which reinforces the hardware hypothesis. Here’s a graph of 5-minute load across all the hosts in one of our Cassandra rings:

You can see clearly the ‘stronger’ box at the bottom with the least load, the ‘weak’ us-west-1 hosts at the top, and the less-weak us-west-2 hosts in the middle. Occam’s Razor, right? Not so fast.

More Data, More Visibility

With the sane and sound hardware hypothesis in place, we felt comfortable and saw no need to investigate further. Some time went by, we grew, and so did our Cassandra load. It was time to start optimizing our Cassandra operations. The first thing required in order to properly tune a Cassandra cluster is, of course, MOAR DATA!

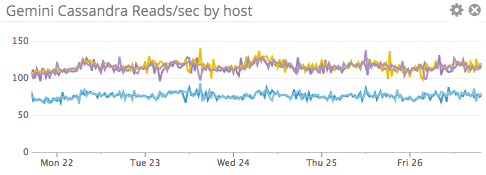

A Cassandra upgrade provided us with more metrics than we knew what to do with. We began building new dashboards to see if we could find anything interesting. There were several surprises, but one in particular defied explanation: while writes were evenly distributed across all nodes, read load was asymmetric. Further investigation showed this pattern to be present in several different services with similar topology.

Three hosts were consistently receiving more reads than the other two. This was surprising because read load entering the system was symmetric, and with a replication factor equal to the cluster size, all things should be even. Taking a look at the topology, it became apparent that the more heavily loaded hosts were all in us-west-1 and Linode. Discounting Linode as an outlier (due to more CPU cores), it was clear that the hardware hypothesis was incorrect – those hot nodes were indeed busier than the rest.

Cassandra Read Ops vs Write Ops

We perform quorum writes, though due to our high replication factor, all nodes must write the data. While the coordinator needs only a majority acknowledgement to succeed, the write is still dispatched to everyone. We also perform quorum reads, but unlike writes, the read is only dispatched to the minimum number of nodes required. In our case, that number is three… very suspicious.

The coordinator is responsible for selecting the nodes it wishes to read from. Turns out that it selects the nodes which have been responding the fastest. Certainly the slightly slower hardware is not outperforming, so what gives?

RTT is a Thing

Since our Cassandra clusters are geographically distributed, inter-node communication takes a bit of time. Exactly how long is determined by the source and destination, as the relationships are not equilateral. Here’s a diagram demonstrating our topology, and the approximate round-trip latencies between them:

If we assume for a minute that all members perform equally well in terms of read latency, we can begin to work out the probability that a particular node will receive a read request from a given coordinator. So, for every node in the ring, we find the closest neighbors (and thus the fastest responses). Using labels [A-E] as assigned in the previous diagram, we can generate a table:

| Coordinator | Neighbors | A prob. | B prob. | C prob. | D prob. | E prob. |

|---|---|---|---|---|---|---|

| A | A, B, C | 1 | 1 | 1 | 0 | 0 |

| B | A, B, C | 1 | 1 | 1 | 0 | 0 |

| C | A, B, C | 1 | 1 | 1 | 0 | 0 |

| D | D, E, (A,B,C) | .33 | .33 | .33 | 1 | 1 |

| E | D, E, (A,B,C) | .33 | .33 | .33 | 1 | 1 |

With this table in hand, we can see that nodes D-E will receive a read only 2/5ths of the time, meaning that nodes A-C end up receiving about 60% more reads than their counterparts!

Real-World Read Distribution

In practice, we are not too far off. Eyeballing the read operations graph, the us-west-2 nodes do on average 75 reads/sec, while the others are seeing about 115-120 reads/sec – shockingly close to 60% more! We see this shift around a little bit as routes pop and local read latencies change, but even with those variables in play, we remain remarkably close to the predictions made by the distribution table.

Learnings and Take-Aways

Now that we understand all of this, the natural question to ask is, ‘Well, what can we do about it?’ Moving datacenters so that they are equally distant from each other network-wise would be a futile attempt as internet routes can easily change. Distributing our reads unevenly to more heavily lean on D-E as coordinator nodes would incur an unnecessary performance penalty as clients in other datacenters would have to traverse an internet route in order to reach the cluster. Alas, it seems that the most appropriate response is to simply scale up the A-C nodes and absorb the load. This can be problematic if we were to lose a datacenter, but is manageable so long as the remainders are not under-provisioned for such a case.

This was a very interesting learning for us. The answer now seems obvious, but it serves as an example of just how easy it is to make assumptions about your systems that simply don’t hold in real life. It is also worth noting that this is not a Cassandra-specific behavior. Any geographically distributed system which dispatches work to hosts with the lowest response time will suffer from this phenomenon. Something to keep in mind if you ever find yourself building a system with similar characteristics… hopefully we have saved you some time :).

Many thanks to all the PagerDuty Engineers who took their time to get to the bottom of this, this blog post wouldn’t be possible without them! Be sure to check out our talks from Cassandra Summit on this topic and more, by Donny Nadolny and Paul Rechsteiner.