Standing on the shoulders of giants and stumbling with them – the Amazon AWS outage’s "pain" statistics

Today, at around 1am Pacific Time, Amazon began having major problems with some of their cloud infrastructure: specifically with their EC2, EBS, and RDS offerings. The issues are ongoing, and many of your favorite internet sites or services are probably still down or at reduced functionality because of it.

This kind of outage is one of PagerDuty’s big “moments”: when a big chunk of the services on the internet says, “Hey PagerDuty, I’m down, so wake someone up to fix me!”

There’s lots of coverage on this issue already out there, so we won’t go into much detail on the AWS situation itself. But we’d like to share some statistics on the alerts we sent out – via phone or SMS – during the outage. We think these numbers could shed some light on what proportion of the internet was affected by the issues. We don’t presume that we’re being used (yet!) by a “huge”, “moderate”, or even “realistically statistically significant” proportion of internet websites or SaaS providers, but we think these numbers are definitely interesting and can be taken on the whole as a sort of pain metric for this AWS outage.

Since the outage began, we have routed notifications to around 36% of our customer base. In other words, 36% of PagerDuty customers have been having issues – ones big enough to actually page one of their sysadmins or engineers to work on the problem – since the AWS problems began.

Most PagerDuty customer accounts have more than one user – sysadmin, engineer, “ops guy”, etc. – involved in their on-call rotations. We have paged more than 10% of our entire user base. In other words, more than 10% of all of our customers’ operations staff have been woken up and/or called in by our systems to work on their problems. This is probably just the tip of the iceberg, too: we usually handle only the first alert, and these AWS problems are probably causing a lot of “all-hands-on-deck” situations where entire ops teams (and more) are called in to fight fires after the on-call engineer has been woken up by PagerDuty.
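Both percentages above are distinct-count ratios: an account or a user paged ten times still counts once. A minimal sketch in Python, assuming a hypothetical `Notification` record with account, user, and timestamp fields (not PagerDuty's actual schema) and using made-up sample data rather than any real figures:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Notification:
    """One outgoing page (phone, SMS, or email). Hypothetical record shape."""
    account_id: str
    user_id: str
    sent_at: datetime


def pain_metrics(notifications, total_accounts, total_users, since):
    """Return (share of accounts paged, share of users paged) since `since`.

    Accounts and users are counted once each, however many pages they received.
    """
    recent = [n for n in notifications if n.sent_at >= since]
    accounts_paged = {n.account_id for n in recent}
    users_paged = {n.user_id for n in recent}
    return len(accounts_paged) / total_accounts, len(users_paged) / total_users


# Hypothetical outage start time and toy data (not real PagerDuty numbers).
OUTAGE_START = datetime(2020, 1, 1, 9, 0, tzinfo=timezone.utc)
SAMPLE = [
    Notification("acct-1", "user-1", OUTAGE_START - timedelta(hours=1)),    # before outage: ignored
    Notification("acct-1", "user-2", OUTAGE_START + timedelta(minutes=5)),
    Notification("acct-1", "user-2", OUTAGE_START + timedelta(minutes=20)),  # repeat page: counted once
    Notification("acct-2", "user-3", OUTAGE_START + timedelta(hours=2)),
]

account_share, user_share = pain_metrics(
    SAMPLE, total_accounts=5, total_users=20, since=OUTAGE_START
)
print(f"{account_share:.0%} of accounts, {user_share:.0%} of users paged")
# prints "40% of accounts, 10% of users paged"
```

Counting distinct users rather than raw pages matters during an outage like this one, since the same on-call engineer may be paged repeatedly and a simple page count would overstate how many people were affected.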

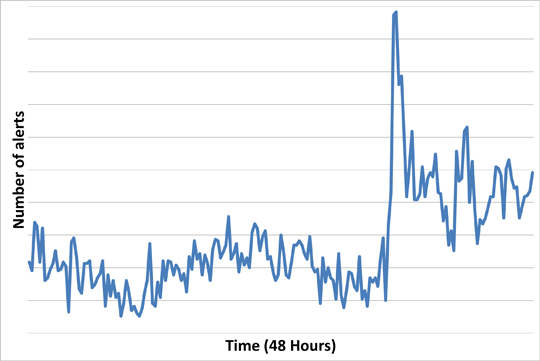

Below is a graph of the number of alerts – phone, SMS, and email – that we have sent out over the last 48 hours. There was a big spike in outgoing alerts around the time of the AWS outage, and alert levels have remained high since.

PagerDuty outgoing phone/SMS/email alerts during AWS outage

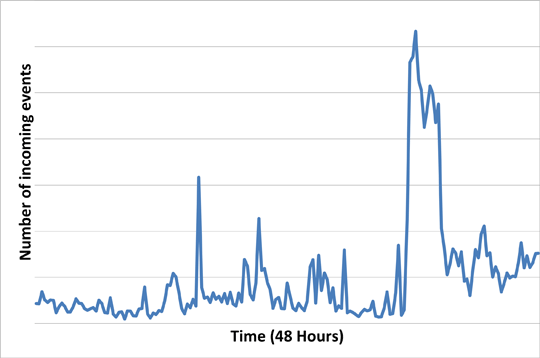

Below is a graph of the number of “events” being sent to PagerDuty by our customers’ monitoring systems, through our API or through email. We don’t send out a phone/SMS/etc. alert for every “event” that monitoring systems send us; instead, we de-duplicate them so as not to overwhelm our already-harassed and bleary-eyed users. As you can see, we were flooded by a huge number of events when the outage first began, and incoming event levels are still high.
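The de-duplication step described above can be sketched roughly as follows. This is a minimal illustration, not PagerDuty's implementation: it assumes each incoming event carries a key naming the underlying problem (called `dedup_key` here, a hypothetical field name) and an action of either "trigger" or "resolve". Repeat triggers for a problem that is already open are absorbed instead of paging someone again.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    """An incoming monitoring event (hypothetical shape)."""
    dedup_key: str  # identifies the underlying problem, e.g. "db-01/disk-io"
    action: str     # "trigger" or "resolve"


@dataclass
class Deduplicator:
    """Keeps at most one open incident per key; only a newly opened incident pages."""
    open_incidents: set[str] = field(default_factory=set)

    def handle(self, event: Event) -> bool:
        """Process one event and return True if it should page someone."""
        if event.action == "resolve":
            self.open_incidents.discard(event.dedup_key)
            return False
        if event.dedup_key in self.open_incidents:
            return False  # already paged for this problem: absorb the event
        self.open_incidents.add(event.dedup_key)
        return True


dedup = Deduplicator()
events = [
    Event("web-01/http", "trigger"),
    Event("web-01/http", "trigger"),    # duplicate: no new page
    Event("db-01/disk-io", "trigger"),
    Event("web-01/http", "resolve"),
    Event("web-01/http", "trigger"),    # problem came back: page again
]
pages = [e.dedup_key for e in events if dedup.handle(e)]
print(pages)  # ['web-01/http', 'db-01/disk-io', 'web-01/http']
```

Keying on the problem rather than on the individual event is what lets a flood of incoming events, like the one at the start of this outage, turn into a much smaller number of phone calls and texts.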

PagerDuty incoming events during AWS outage