Engineering Blog

Live Updating in Mobile Applications

Background

For many modern-day apps, the user interface either automatically shows the most up-to-date content to its users, or presents an indicator that new content is available. For responders and stakeholders, having the most up-to-date information is important, and at times crucial.

Repeatedly pulling down a view to refresh is wasteful and distracts you from the issue at hand. We wanted to improve the experience by automatically updating the view when something changed on the server.

Unlike other product enhancements, such as schedules and other interfaces built on an existing API, the data for this feature wasn’t available to the mobile team in the form we needed.

There’s more than one way to skin a cat. When it comes to implementing auto-updates for your app, there are several ways to go about it, including polling, leveraging third-party sync, push notifications, and WebSockets.

Weighing the Options

Polling

The first option that comes to mind is polling, where we automatically query the server periodically behind the scenes. This is really a last resort, as updates are likely to happen in between polling intervals, and mobile data is expensive in some markets. On the plus side, developing this solution wouldn’t take anything but adding a timer.

Leveraging third-party sync

If we used a technology like Realm Object Server or Couchbase Lite and simply bound our views to the local database, we could rely on the client automatically syncing to the server without writing the sync ourselves. This would still require quite a bit of development:

- modify app to bind to database (weeks of effort)

- implement and provision third-party server

- integrate server with existing infrastructure

The more we thought about it, the less appealing it sounded. While we’d benefit from having a local database and binding the views, the risk of vendor lock-in and the uncertainty in implementing a gateway to a third-party server were red flags.

Push Notifications

If we could send a push notification every time an incident changed, but with a special payload that marks it as non-visual, that could work. But there are a couple of problems (of varying degrees) with that:

- push notifications can be slow to deliver

- push notifications are still delivered after a reconnection if the device had no Internet connection when delivery was attempted, meaning the device would receive push notifications after they’re no longer relevant (though these can be filtered out with a timestamp)
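The timestamp filter mentioned in the last point could look roughly like the sketch below. It is written in Scala only to match the server code later in this post; the `SilentPush` payload, its field names, and the `maxAge` threshold are assumptions made for illustration, not part of any real push payload.

```scala
import java.time.{Duration, Instant}

// Hypothetical payload of a silent ("non-visual") push; field names are assumptions.
final case class SilentPush(incidentId: String, sentAt: Instant)

object StalePushFilter {
  // True when the push was sent no more than `maxAge` before `now`,
  // i.e. it is still recent enough to be worth acting on.
  def isFresh(push: SilentPush, now: Instant, maxAge: Duration): Boolean =
    Duration.between(push.sentAt, now).compareTo(maxAge) <= 0
}
```

A push that arrives after a long offline period fails the check and can simply be dropped, although the slow-delivery problem remains.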

WebSockets

Most of your favourite apps update themselves with WebSockets, which are persistent connections between your mobile device and the server. Messages can be transmitted in text or binary both ways. The time between the event and the device becoming aware is constrained only by our infrastructure and the network speed.

If we could just establish a WebSocket connection to a server and receive a message each time an incident is created or updated, that would be ideal. This would require some development as well:

- modify app to maintain a WebSocket connection to the server

- build a WebSocket server that tells the client when an incident is created or updated

This last task was an unknown until we examined our existing infrastructure to determine whether such a server could even know when an incident is created or updated.

Enter Apache Kafka.

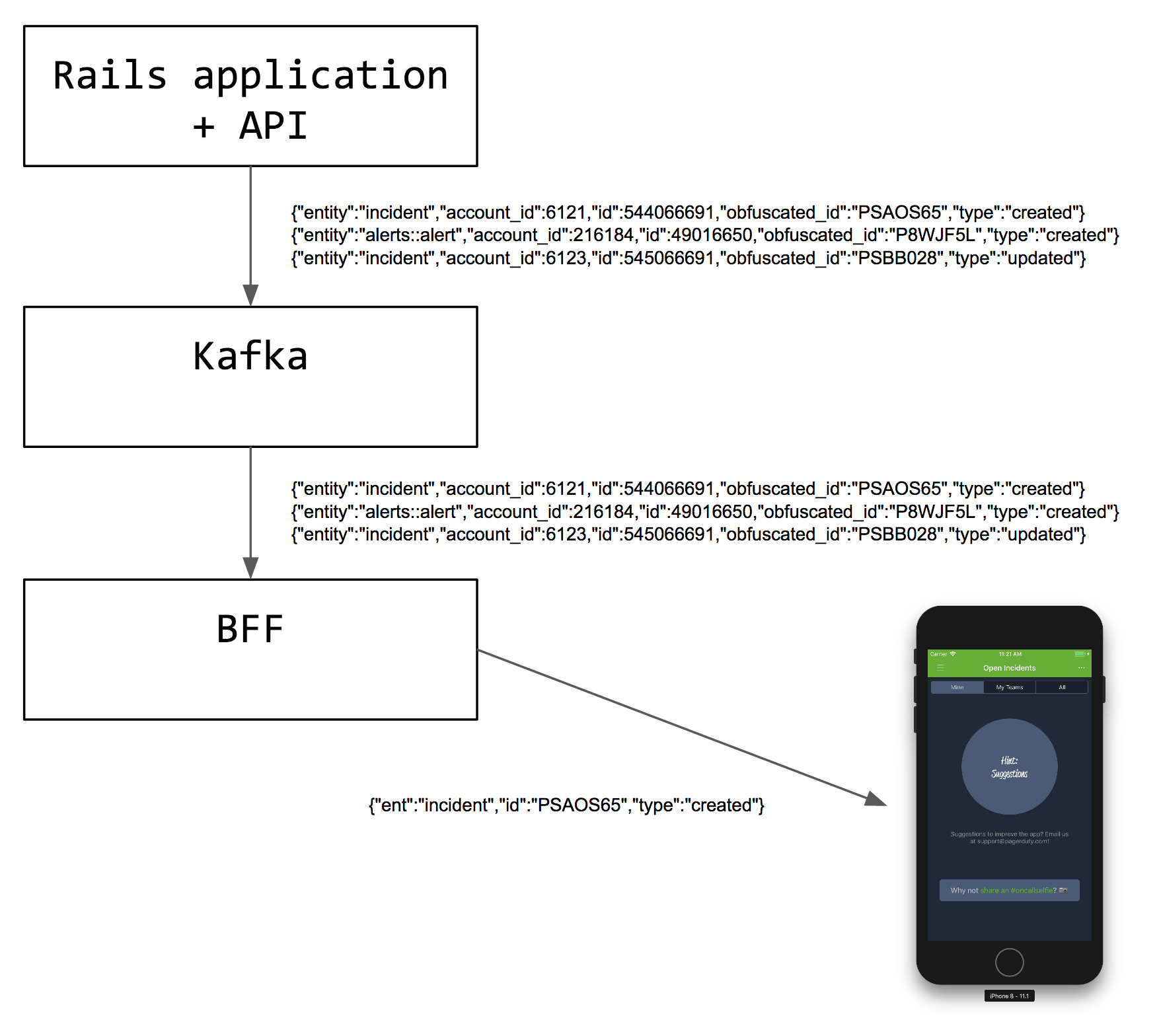

While PagerDuty uses a persistent store for all the data we need to keep, our different services communicate through Kafka topics. Luckily, as part of work that was already done to make the PagerDuty web application auto-update, there is already a topic in Kafka that receives messages when the Rails app saves an incident. (This same code is exercised by the API.)

Having determined that the data exists and is available, we decided to build a WebSocket server.

Proof-of-Concept

Before embarking on a large engineering initiative, it’s a good idea to prove that it’s going to work. There was no doubt that we could build the WebSocket server, having done similar work in the past, but the client-side implementation was an unknown.

We quickly implemented a WebSocket server in Python that periodically sent fixed event data. Then we added a WebSocket client into the iOS app, connected to the server, and broadcast the message to the Incident Details view controller, which refreshed upon receiving the message for the incident in the event.

The Proof-of-Concept was built in a couple days and worked very well, leaving us confident that the entire system could be built.

Server-Side Implementation

Before adding a WebSocket endpoint for the client to connect to, we need a server, which involves some design and provisioning. Luckily, we’ve already got a service for mobile applications called the “Mobile BFF”, where BFF is a Backend for Frontend. It’s written in Scala and uses the Akka HTTP library for reactive streaming. Ideally we’ll just take requests for WebSockets, pass them off to a new controller, which will stream events from Kafka through to the phone.

But, like, how?

Akka Streams is the foundation of our approach. Our BFF server becomes a Kafka consumer (a Source) using Akka Streams Kafka. When a request for a WebSocket comes in from the mobile device, we authenticate the request, determine which account it’s for, and then create a Flow that keeps only the messages that apply to that account. We apply some transformations (filter out some messages, shorten the JSON to save data) and then Sink it into the WebSocket.

```scala
request.header[UpgradeToWebSocket] match {
  case Some(upgrade) =>
    // Drop alert events, then serialize each remaining event as a text frame.
    val textEventSource = source
      .filter { event =>
        event.entity != "alerts::alert"
      }
      .map { event =>
        TextMessage(write(transformer.transform(event)))
      }
    // Messages sent by the client are ignored; events only flow to the phone.
    upgrade.handleMessagesWithSinkSource(Sink.ignore, textEventSource)
  case None =>
    Future.successful(HttpResponse(BadRequest, entity = "Client did not request a WebSocket."))
}
```

The appeal of this approach is that everything in the process works with streams – the Kafka consumer, the filtering, the transformation, and the WebSocket itself.
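To make the filter-and-shorten step concrete without pulling in Akka, here is a minimal sketch that uses a plain Scala `Iterator` in place of the stream. The `IncidentEvent` shape, the `accountId` field, and the `LiveEventPipeline` name are assumptions for illustration; the real transformer and serializer are not shown in this post, and the hand-built JSON assumes the field values need no escaping.

```scala
// Hypothetical shape of an event read from the Kafka topic; field names are assumptions.
final case class IncidentEvent(accountId: String, entity: String, id: String, eventType: String)

object LiveEventPipeline {
  // Shorten the payload to the thin JSON that is sent to the phone.
  // Assumes the values contain no characters that need JSON escaping.
  def toThinJson(e: IncidentEvent): String =
    s"""{"ent":"${e.entity}","id":"${e.id}","type":"${e.eventType}"}"""

  // Keep only this account's events, drop alert events, then shorten each one.
  def forAccount(events: Iterator[IncidentEvent], accountId: String): Iterator[String] =
    events
      .filter(_.accountId == accountId)
      .filter(_.entity != "alerts::alert")
      .map(toThinJson)
}
```

The same three steps map onto `filter` and `map` stages of a streaming Flow; the iterator is only a stand-in so the logic can be read in isolation.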

The net result of this is quite beautiful:

```
rwalberg$ npx wscat -c http://localhost:8080/live/v1 -p 13 -H "Authorization: Bearer <redacted>"
connected (press CTRL+C to quit)
< {"ent":"incident","id":"PAM4FGS","type":"created"}
< {"ent":"incident","id":"PAM4FGS","type":"updated"}
>
```

In the future, if we choose to enable two-way communication between the client and the server, we can also treat the WebSocket as a Source and handle incoming messages.

Client-Side Implementation

iOS

On the iOS end we chose to use the excellent Starscream library. It’s always up-to-date with Swift, performs its I/O in the background, and has a really elegant API.

We close the socket when the app is backgrounded, and open it again when it’s foregrounded. If we’re unable to connect the WebSocket, the app will use exponential backoff to reconnect over time.
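The backoff schedule itself is simple. The sketch below shows one common way to compute it, in Scala for consistency with the server snippet (the app itself is written in Swift); the one-second base and sixty-second cap are assumed values, not the ones the app uses.

```scala
object Backoff {
  // Delay before reconnect attempt `attempt` (0-based):
  // baseMillis * 2^attempt, capped at maxMillis.
  def delayMillis(attempt: Int, baseMillis: Long = 1000L, maxMillis: Long = 60000L): Long = {
    require(attempt >= 0, "attempt must be non-negative")
    // Cap the exponent so the shift stays well inside the range of a Long.
    val exp = math.min(attempt, 30)
    math.min(maxMillis, baseMillis * (1L << exp))
  }
}
```

Resetting the attempt counter after a successful connection keeps the next outage from starting at the maximum delay.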

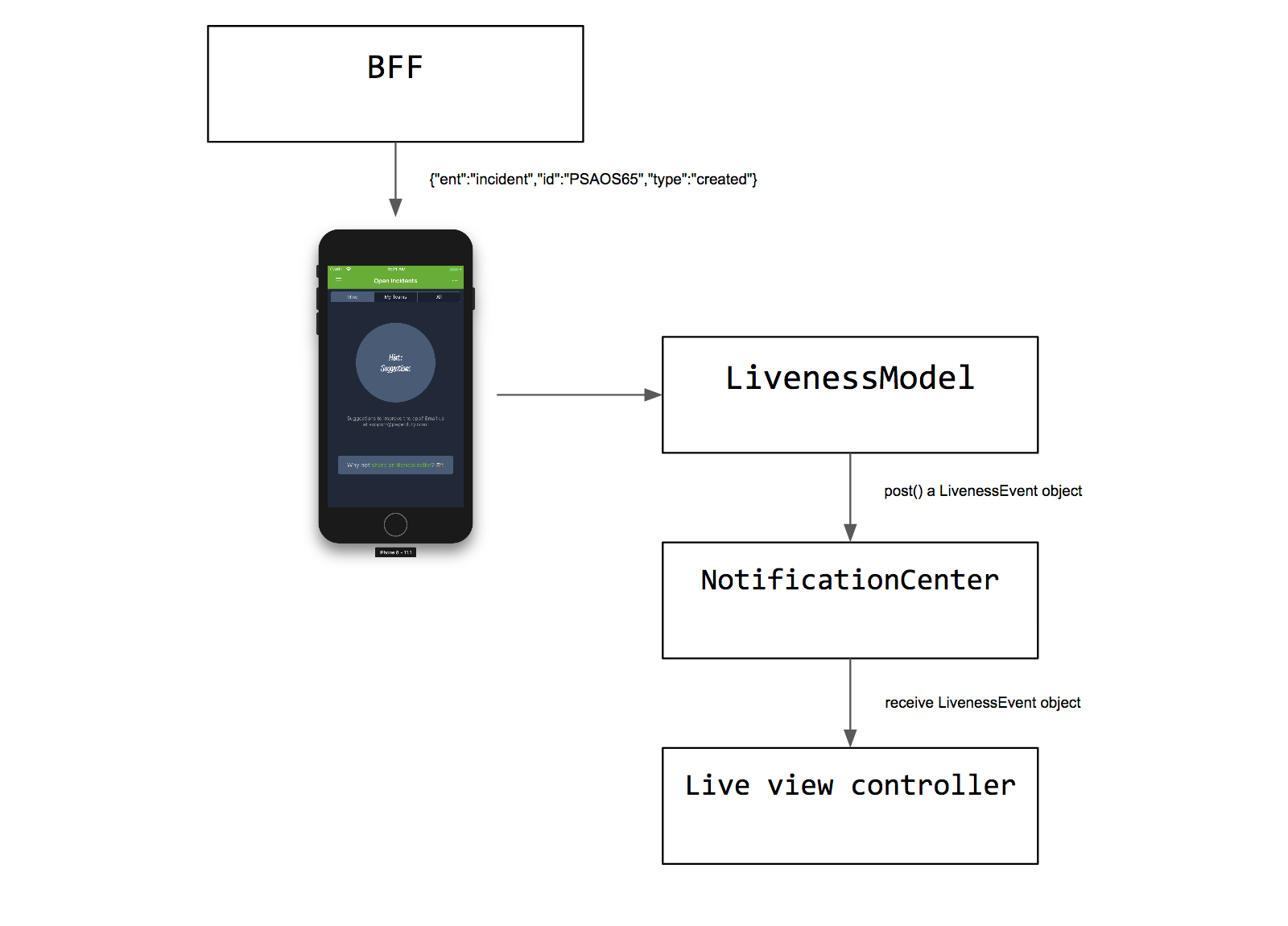

The AppDelegate owns the LivenessModel which maintains the WebSocket connection and handles incoming messages. We use the Reachability.swift library to make sure we aren’t trying to connect while you have no Internet connection.

The LivenessModel communicates to other parts of the app by posting messages to the NotificationCenter. We perform all our I/O on background threads to keep the UI snappy.

This decoupling allowed us to ship the code for live updating while keeping it locked behind a feature flag, without suffering the complexity of multiple branches of development. If you don’t have the feature flag enabled, you don’t have a LivenessModel, hence nothing is posting events to NotificationCenter and your live updating code just doesn’t fire.
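The idea can be sketched in a few lines. This is not the app’s Swift code: `EventHub` is a made-up stand-in for NotificationCenter, and `LiveUpdating.start` is a hypothetical helper, written in Scala to match the server snippet above.

```scala
import scala.collection.mutable.ListBuffer

// Minimal stand-in for a notification hub; all names here are hypothetical.
final class EventHub {
  private val observers = ListBuffer.empty[String => Unit]
  def subscribe(observer: String => Unit): Unit = { observers += observer; () }
  def post(incidentId: String): Unit = observers.foreach(_(incidentId))
}

// The only component that posts live-update events to the hub.
final class LivenessModel(hub: EventHub) {
  def onSocketMessage(incidentId: String): Unit = hub.post(incidentId)
}

object LiveUpdating {
  // With the flag off no model is created, so subscribers are never notified.
  def start(flagEnabled: Boolean, hub: EventHub): Option[LivenessModel] =
    if (flagEnabled) Some(new LivenessModel(hub)) else None
}
```

Views can subscribe unconditionally; whether they ever receive anything depends only on whether the model was created.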


Android

We were already using OkHttp, but an older version, so we upgraded it. OkHttp has WebSocket support built in. It even supports automatically pinging the server so the connection doesn’t get dropped.

Similar to the iOS app, we close the socket when the app is backgrounded, and open it again when we return to the foreground. The Android app also uses exponential backoff.

To determine whether or not there is a network connection, we use a BroadcastReceiver that listens to the Android system.

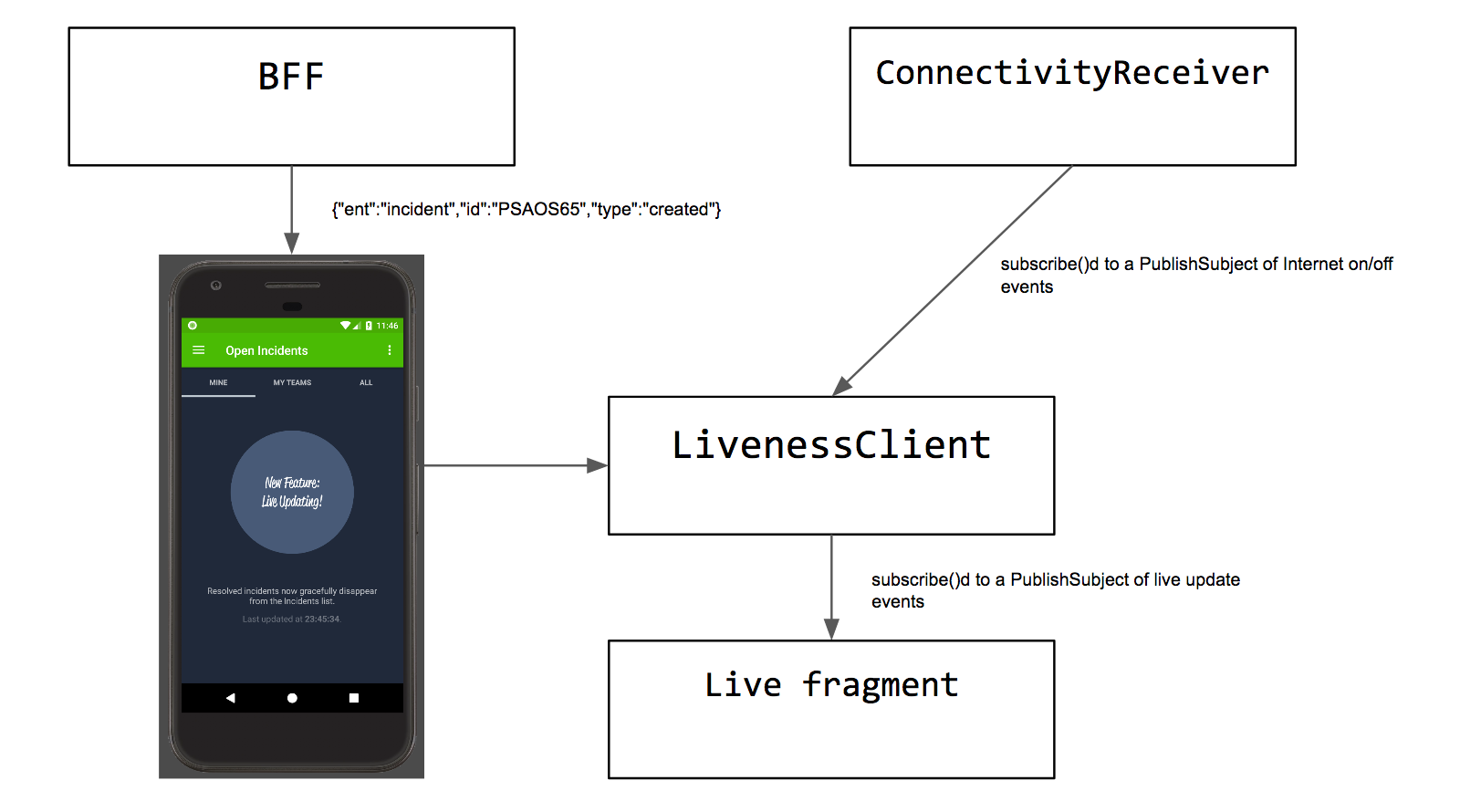

Since our Android app is based on RxJava, the various fragments that are interested in live updates can simply subscribe to a subject exposed by the LivenessClient, which is in turn owned by the Application. What’s interesting about this approach is that we are doing reactive programming all the way from the event’s source (the web application publishing to Kafka), all the way to the fragment that displays the update!

I love it when a plan comes together!

Optimizations and Tweaks

Given that our events are very thin (only an ID and an event type) the app doesn’t know if the incoming events are even applicable to what’s being displayed. We certainly don’t want to make a GET call to the server for every event, so to that end, we made several optimizations.

- API calls are only made when the UI is displaying the Incident Details or Open Incidents list.

- If an event comes in for `"type": "created"`, it’s ignored on the Incident Details screen. For Open Incidents, we have to retrieve the incident, unless for some reason we already have it in the list due to a delay in processing.

- If an event comes in for `"type": "updated"`, it’s always processed on the Incident Details screen. But for Open Incidents, we do not have to retrieve the incident, unless we are currently displaying it in Incident Details, or we currently have it in any of the lists in Open Incidents (e.g. Mine/My Teams/All).

- The Android app normally pops up a dialog when the app is in the foreground and a push notification arrives for one or more incidents. If Live Updating is enabled and you’re looking at that particular incident (or a list with that incident in it) we suppress the dialog… it’s just getting in your way, and chances are you already got the live update a few seconds before the notification.

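The fetch rules in the list above can be condensed into a single decision function. The sketch below is in Scala for consistency with the server snippet, not the apps’ actual Swift or Android code, and every name in it is hypothetical. It also makes one interpretive assumption: on Incident Details, an "updated" event triggers a fetch only when it is for the incident being shown.

```scala
// What the UI is currently showing; all types here are hypothetical.
sealed trait Screen
case object Other extends Screen
final case class IncidentDetails(incidentId: String) extends Screen
final case class OpenIncidents(listedIds: Set[String]) extends Screen

// The thin event received over the WebSocket.
final case class LiveEvent(id: String, eventType: String)

object FetchPolicy {
  // Decide whether an incoming event justifies a GET call for the incident.
  def shouldFetch(screen: Screen, event: LiveEvent): Boolean =
    (screen, event.eventType) match {
      // A new incident never affects the incident currently shown in detail.
      case (IncidentDetails(_), "created") => false
      // Assumption: refresh only when the update is for the displayed incident.
      case (IncidentDetails(shown), "updated") => shown == event.id
      // New incidents are fetched unless the list already contains them.
      case (OpenIncidents(ids), "created") => !ids.contains(event.id)
      // Updates matter only for incidents already in the list.
      case (OpenIncidents(ids), "updated") => ids.contains(event.id)
      // Any other screen or event type: no API call.
      case _ => false
    }
}
```

Keeping the rules in one pure function makes them easy to unit test on both platforms, independent of the networking code.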
Measuring Success

PagerDuty is a data-driven organization, and we don’t claim success without the numbers to back it up.

To begin with, months before we GA’d the feature, we began tracking analytics each time the Open Incidents or Incident Details views were pulled down to refresh. As we built the feature, we tracked every time a live update event caused it to automatically refresh.

The hypothesis is that as time goes on – and with a bit of prompting from our empty-state tips – users will realize they don’t have to pull-to-refresh anymore, and we will see a drop in the percentage of users who do so. We naturally expect to see a rise in automatic refresh events as it’s going to happen whether users expect it or not.

Conclusion

During this project we encountered significant technical challenges in delivering the data to the device in a data-efficient and timely way. We also encountered unexpected rough edges in presenting the data to users in a way that feels natural. Often we made changes in the days following sprint reviews. What is emerging through all of this refinement is a slick enhancement to a product that works the way a responder needs it to.